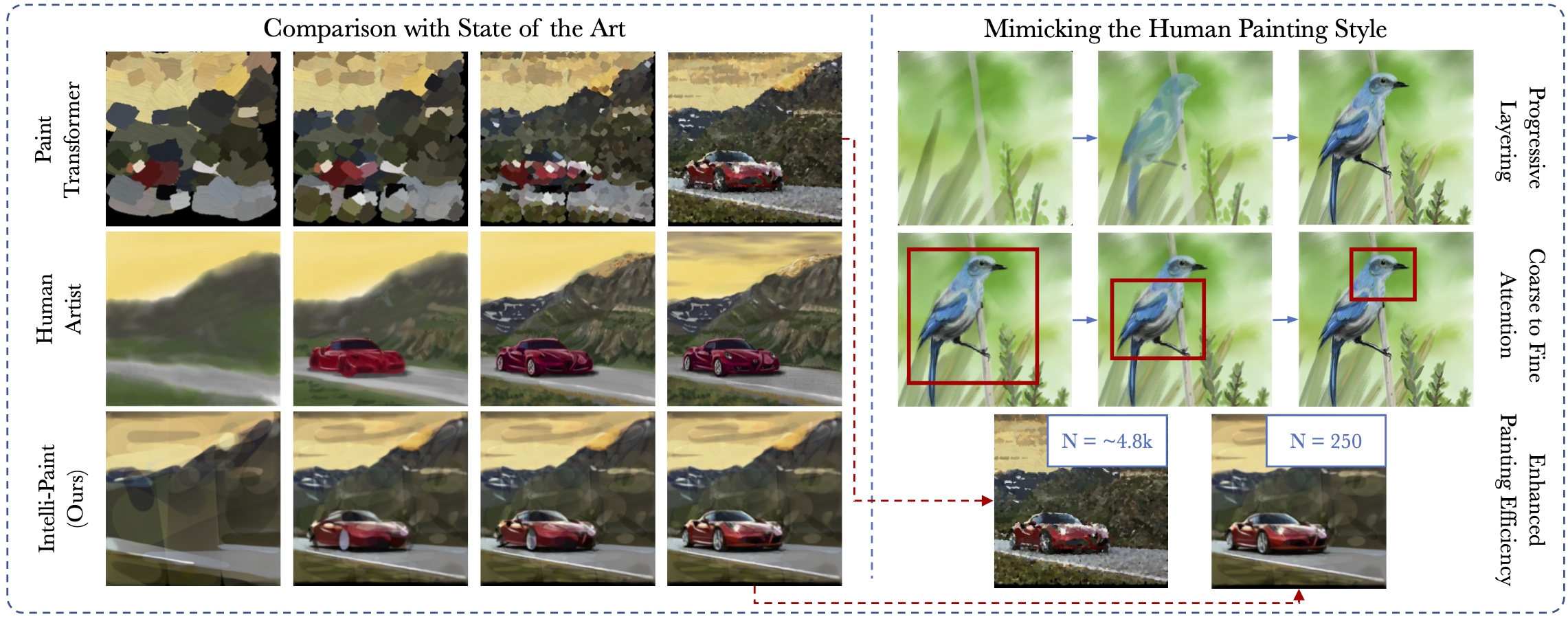

Stroke based rendering methods have recently become a popular solution for the generation of stylized paintings. However, the current research in this direction is focused mainly on improving final canvas quality, and thus often fails to consider the intelligibility of the generated painting sequences to actual human users. In this work, we motivate the need to learn more human-intelligible painting sequences in order to facilitate the use of autonomous painting systems in a more interactive context (e.g. as a painting assistant tool for human users or for robotic painting applications). To this end, we propose a novel painting approach which learns to generate output canvases while exhibiting a painting style which is more relatable to human users. The proposed painting pipeline, Intelli-Paint, consists of 1) a progressive layering strategy which allows the agent to first paint a natural background scene before adding each of the foreground objects in a progressive fashion; 2) a novel sequential brushstroke guidance strategy which helps the painting agent shift its attention between different image regions in a semantic-aware manner; and 3) a brushstroke regularization strategy which allows for a ~60-80% reduction in the total number of required brushstrokes without any perceivable difference in the quality of the generated canvases. Through both quantitative and qualitative results, we show that the resulting agents not only show enhanced efficiency in output canvas generation but also exhibit a more natural-looking painting style which would better assist human users in expressing their ideas through digital artwork.

Interactive Painting Applications. The practical merit of a stroke based rendering approach over pixel based image stylization methods (e.g. using GANs, VAEs) lies in its ability to mimic the human artistic creation process. In fact, several previous works, including Paint Transformer, Optim and RL, describe this ability to mimic a human-like painting process as their motivation for using a brushstroke based approach for image generation. The idea is that, once trained, the learned painting agent can act as a painting assistant or teaching tool for human users (Paint Transformer; RL, Huang et al., 2019).

Robotic Painting Applications. Robotic applications for the expression of AI creativity are being increasingly explored. For instance, Pindar Van's cloudpainter robot has gained widespread global attention for the automated creation of artistic paintings. Our contribution is significant in this direction: our method not only learns a painting sequence which is more interpretable to actual human users but, more importantly, provides an *efficient painting plan* which would allow a robotic agent to paint a vivid scene using significantly fewer brushstrokes than previous works.

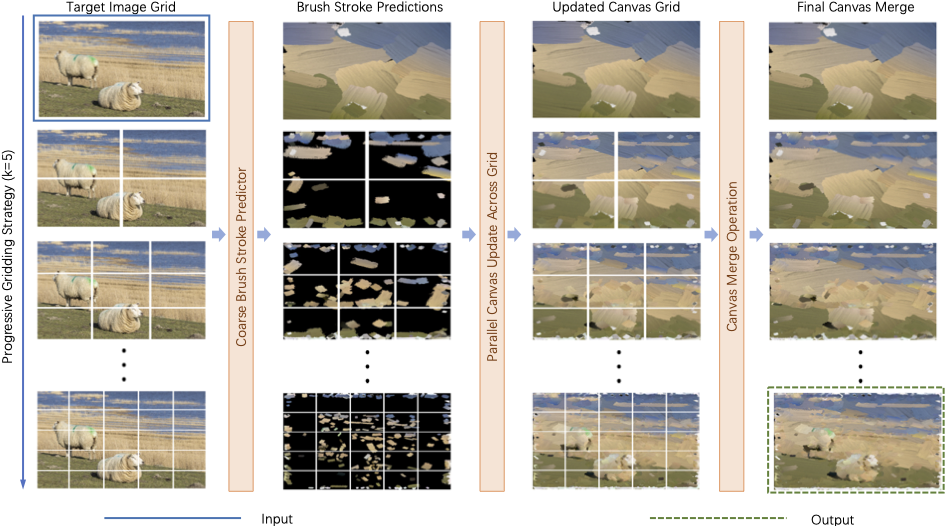

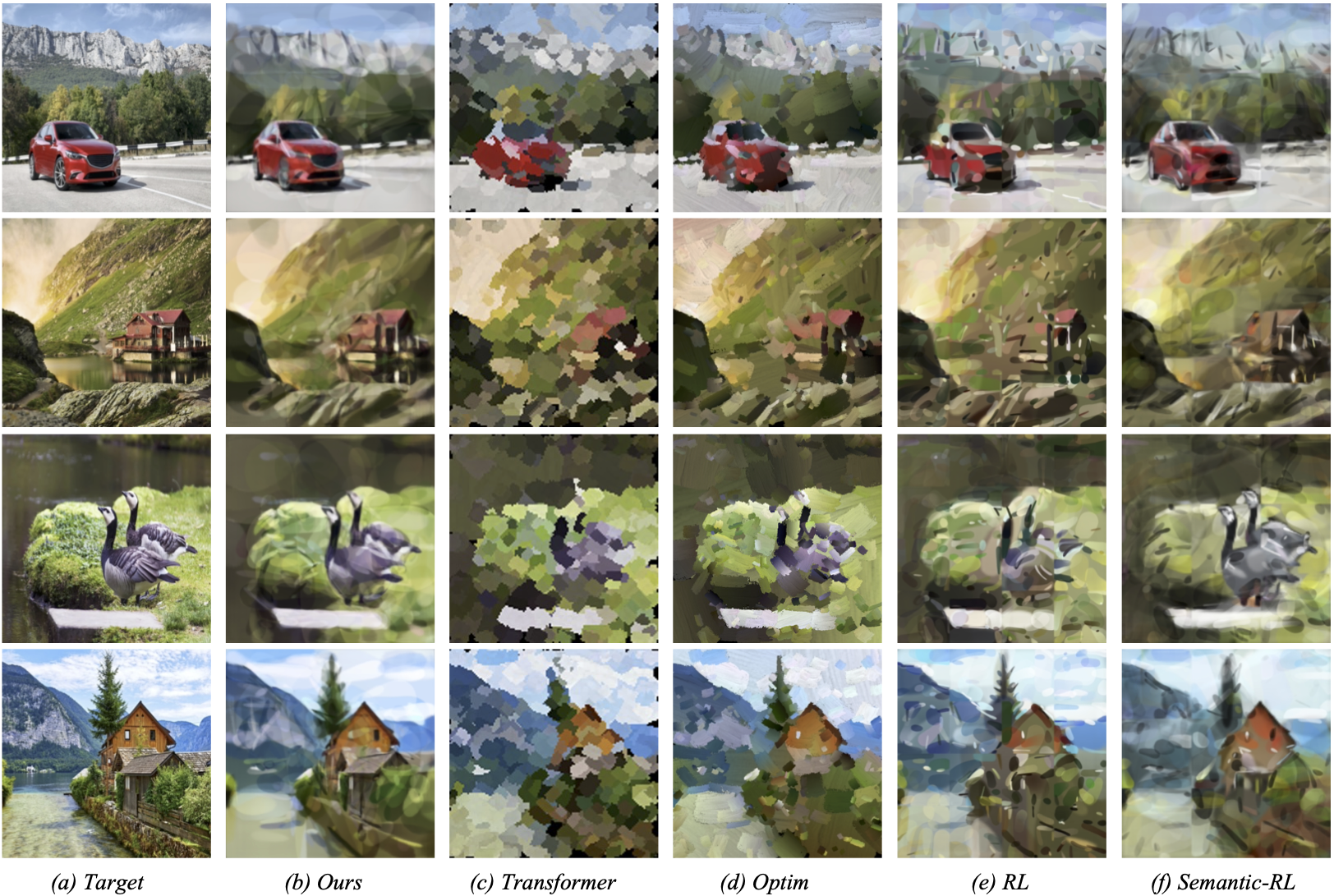

Despite the above-mentioned motivations, the generation of competitive results using previous works is invariably dependent on a progressive grid-based division strategy. In this setting, the agent divides the overall image into successively finer grids, and then proceeds to paint each grid cell in parallel. Experimental analysis reveals that this not only reduces the efficiency of the final agent, but also leads to mechanical (grid-based) painting sequences (see Fig. 2) which are not directly applicable to actual human users.
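To make the grid-based strategy concrete, the following is a minimal sketch of how an image could be divided into successively finer grids. The function names and the doubling schedule (1x1, 2x2, 4x4, ...) are assumptions made for illustration; previous works may use a different schedule.

```python
from typing import List, Tuple

# A cell is (top, left, height, width) in pixel coordinates.
Cell = Tuple[int, int, int, int]


def grid_cells(height: int, width: int, divisions: int) -> List[Cell]:
    """Split a height x width image into divisions x divisions cells."""
    cells = []
    for row in range(divisions):
        top = row * height // divisions
        bottom = (row + 1) * height // divisions
        for col in range(divisions):
            left = col * width // divisions
            right = (col + 1) * width // divisions
            cells.append((top, left, bottom - top, right - left))
    return cells


def progressive_grid_schedule(height: int, width: int, levels: int) -> List[List[Cell]]:
    """Return one list of cells per level (assumed schedule: 1x1, 2x2, 4x4, ...)."""
    return [grid_cells(height, width, 2 ** level) for level in range(levels)]


schedule = progressive_grid_schedule(128, 128, levels=3)
cells_per_level = [len(level) for level in schedule]
```

Each cell at a given level would be painted independently, which is why the resulting stroke order follows the grid layout rather than the semantic content of the image.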

In order to address the need for more human-intelligible painting sequences, we propose a novel Intelli-Paint pipeline which learns to paint canvases while mimicking the human painting process using three main modules.

We next analyse the importance of each module in learning a more human-relatable painting style.

The human painting process is often progressive and multi-layered. That is, instead of painting everything on the canvas at once, humans often first paint a basic background layer before progressively adding each of the foreground objects on top of it (see Fig. 1). However, such a strategy is hard to learn using previous works, which directly minimize the pixel-wise distance between the generated canvas and the target image.

As shown in Fig. 4 below, we propose a progressive layering module which, much like a human artist, allows the painted canvas to evolve in multiple successive layers.
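The contrast between a plain pixel-wise objective and a layered one can be sketched as follows. This is an illustrative simplification, not the formulation used in the paper: it assumes a binary foreground mask is available (for example from a segmentation model), and the hypothetical `layered_loss` simply ignores foreground pixels during the background stage.

```python
def masked_l2(canvas, target, mask):
    """Mean squared error over the pixels where mask is 1."""
    total, count = 0.0, 0
    for c_row, t_row, m_row in zip(canvas, target, mask):
        for c, t, m in zip(c_row, t_row, m_row):
            if m:
                total += (c - t) ** 2
                count += 1
    return total / count if count else 0.0


def layered_loss(canvas, target, foreground_mask, stage):
    """Stage-dependent loss (assumed two stages: 'background', then 'foreground')."""
    if stage == "background":
        # Only background pixels contribute while the first layer is painted.
        mask = [[1 - m for m in row] for row in foreground_mask]
    elif stage == "foreground":
        # Every pixel contributes once foreground objects are being added.
        mask = [[1 for _ in row] for row in foreground_mask]
    else:
        raise ValueError(f"unknown stage: {stage}")
    return masked_l2(canvas, target, mask)


# Toy 2x2 grayscale example: one bright foreground pixel on a flat background.
target = [[0.2, 0.9], [0.2, 0.2]]
foreground_mask = [[0, 1], [0, 0]]
canvas = [[0.2, 0.2], [0.2, 0.2]]  # background painted, foreground still missing

background_loss = layered_loss(canvas, target, foreground_mask, "background")
foreground_loss = layered_loss(canvas, target, foreground_mask, "foreground")
```

In this toy case the background stage is already satisfied while the foreground stage still reports an error, so the agent is pushed to add the foreground object only after the background is in place.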

Once the background layer has been painted, the sequential brush-stroke guidance strategy helps our method to add different foreground features in a semantic-aware manner.

Current works on autonomous painting are often limited to using an (almost) fixed brushstroke budget irrespective of the complexity of the target image. Experiments reveal that this not only reduces the efficiency of the generated painting sequence but also results in redundant or overlapping brushstroke patterns (see Fig. 4) which impart an unnatural painting style to the final agent. As shown in Fig. 6 below, we propose a brushstroke regularization formulation which removes these painting redundancies, thereby considerably improving both the painting efficiency and the human-relatability of our approach.
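The notion of a redundant brushstroke can be illustrated with a small sketch. Note that this is a greedy post-hoc pruning stand-in on a toy 1D canvas, not the regularization formulation proposed in the paper; the stroke representation `(start, end, value)` and all function names are assumptions made for illustration.

```python
def render(strokes, length):
    """Paint opaque 1D strokes (start, end, value) in order onto a blank canvas."""
    canvas = [0.0] * length
    for start, end, value in strokes:
        for i in range(start, end):
            canvas[i] = value
    return canvas


def l2(a, b):
    """Mean squared error between two equally sized canvases."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def prune_redundant(strokes, target, tolerance=1e-6):
    """Greedily drop strokes whose removal does not worsen the reconstruction."""
    kept = list(strokes)
    original_loss = l2(render(kept, len(target)), target)
    i = 0
    while i < len(kept):
        candidate = kept[:i] + kept[i + 1:]
        loss = l2(render(candidate, len(target)), target)
        if loss <= original_loss + tolerance:
            kept = candidate  # stroke i was redundant; re-check the same index
        else:
            i += 1
    return kept


target = [0.5] * 4 + [1.0] * 4
strokes = [(0, 8, 0.5), (0, 4, 0.5), (4, 8, 1.0), (4, 8, 1.0)]  # two are redundant
pruned = prune_redundant(strokes, target)
```

Here the four-stroke sequence is reduced to two strokes that render the same canvas. A learned regularizer would aim for a similar effect during training rather than as a separate pruning pass.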

In this paper, we emphasize that the practical merits of an autonomous painting system should be evaluated not only by the quality of generated canvas but also by the interpretability of the corresponding painting sequence by actual human artists. To this end, we propose a novel Intelli-Paint pipeline which uses progressive layering to allow for a more human-like evolution of the painted canvas. The painting agent focuses on different image areas through a sequence of coarse-to-fine localized attention windows and is able to paint detailed scenes while using a limited number of brushstrokes. Experiments reveal that in comparison with previous state-of-the-art methods, our approach not only shows improved painting efficiency but also exhibits a painting style which is much more relatable to actual human users. We hope our work opens new avenues for the further development of interactive and robotic painting applications in the real world.

@inproceedings{singh2022intelli,
  title={Intelli-Paint: Towards Developing More Human-Intelligible Painting Agents},
  author={Singh, Jaskirat and Smith, Cameron and Echevarria, Jose and Zheng, Liang},
  booktitle={European Conference on Computer Vision},
  pages={685--701},
  year={2022},
  organization={Springer}
}